With study week at an end, the midterm is just a day away. I will dedicate this post to a light review of topics I have not touched on in these blog posts.

It is important to distinguish modern OpenGL from old OpenGL. That means understanding how the GPU accesses data through buffers and processes it in parallel, with thousands of cores compared to the four or so cores of a typical CPU. With shaders, the once-fixed pipeline can be programmed to our specific needs, so we no longer have to rely on slow CPU-based methods. To fully understand this, we need to understand the graphics pipeline.

Graphics Pipeline

Vertex data is passed through vertex buffer objects (VBOs) that are sent to the GPU. Once these VBOs are set up, the vertex shader can read them as inputs through a declaration such as `layout (location = N) in vec3 pos;`, where N is the attribute index. Other data can be passed in through uniform variables. The vertex shader passes each vertex's position on to the next stage by assigning it to `gl_Position`.

The next stage, after each vertex is processed, is triangle assembly. Here the vertex positions are grouped into sets of three and connected to form basic triangles, which together make up the full object stored in the VBO.

In the next stage, the triangles are clipped so that only the portions visible on screen remain, and the rasterizer converts those remaining portions into fragments. If other data is passed along with the position (normal, color, UV), the rasterizer also interpolates it between the triangle's vertices and assigns each fragment interpolated values of color, normal, or UV coordinates.

The last step is to process each fragment in the fragment shader. Here, the shader receives the values passed along from the vertex shader, interpolated by the rasterizer, together with data about the fragment itself.
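The fixed-function work between the vertex and fragment shaders, triangle assembly and attribute interpolation, can be sketched on the CPU. This C++ is a simplified illustration under stated assumptions, not how a GPU implements it: `Vertex`, `assembleTriangles` and `interpolateColor` are hypothetical names, vertices are assumed to already be in 2D screen space, and the color is interpolated linearly with barycentric weights. Real hardware uses perspective-correct interpolation and clips triangles before rasterizing them; both are omitted here.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Hypothetical vertex for illustration: a screen-space position plus a color.
struct Vertex {
    float x, y;     // screen-space position (assumed already projected)
    float r, g, b;  // per-vertex color attribute
};

using Triangle = std::array<Vertex, 3>;

// Triangle assembly for a plain triangle list: each consecutive group of
// three vertices becomes one triangle; incomplete leftovers are ignored.
std::vector<Triangle> assembleTriangles(const std::vector<Vertex>& verts) {
    std::vector<Triangle> tris;
    for (std::size_t i = 0; i + 2 < verts.size(); i += 3) {
        tris.push_back({verts[i], verts[i + 1], verts[i + 2]});
    }
    return tris;
}

// Twice the signed area of triangle (a, b, p).
static float edge(const Vertex& a, const Vertex& b, float px, float py) {
    return (b.x - a.x) * (py - a.y) - (b.y - a.y) * (px - a.x);
}

// Linearly interpolates the vertex colors at (px, py) with barycentric
// weights. Returns false when the point is outside the triangle, i.e. no
// fragment would be generated there.
bool interpolateColor(const Triangle& t, float px, float py,
                      std::array<float, 3>& out) {
    float area = edge(t[0], t[1], t[2].x, t[2].y);
    if (area == 0.0f) return false;              // degenerate triangle
    float w0 = edge(t[1], t[2], px, py) / area;  // weight of vertex 0
    float w1 = edge(t[2], t[0], px, py) / area;  // weight of vertex 1
    float w2 = edge(t[0], t[1], px, py) / area;  // weight of vertex 2
    if (w0 < 0.0f || w1 < 0.0f || w2 < 0.0f) return false;
    out[0] = w0 * t[0].r + w1 * t[1].r + w2 * t[2].r;
    out[1] = w0 * t[0].g + w1 * t[1].g + w2 * t[2].g;
    out[2] = w0 * t[0].b + w1 * t[1].b + w2 * t[2].b;
    return true;
}
```

Evaluating `interpolateColor` exactly at a vertex returns that vertex's color, and points between the vertices receive a weighted blend, which is the "interpolated value" the fragment shader later sees.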

In the fragment shader, we typically manipulate the color of the fragment to create effects such as lighting, and we can sample values from a texture by looking them up with the texture coordinates. When all is done, the result is output the same way inputs are declared, for example `layout (location = N) out vec4 color;`. This output is written to a framebuffer: either the default framebuffer, to be drawn on screen, or a framebuffer object (FBO), where it is stored for later use (such as a second pass for post-processing effects).
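A CPU stand-in for a simple fragment shader might look like the following. It is a sketch under assumptions: `Texture`, `sampleNearest` and `shadeFragment` are hypothetical names, the texture is a row-major array of RGB colors, the lookup mimics nearest filtering with clamp-to-edge wrapping, and "lighting" is reduced to a single Lambertian (diffuse) term with pre-normalized vectors. In GLSL the lookup would instead be a call to `texture()` on a sampler.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

static float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Hypothetical RGB texture stored row by row (width * height texels).
struct Texture {
    int width, height;
    std::vector<Vec3> texels;
};

// Nearest-neighbour lookup: UVs are clamped to [0, 1], scaled to texel
// indices, and the closest texel is returned (similar in spirit to
// GL_NEAREST filtering with GL_CLAMP_TO_EDGE wrapping).
Vec3 sampleNearest(const Texture& tex, float u, float v) {
    u = std::min(std::max(u, 0.0f), 1.0f);
    v = std::min(std::max(v, 0.0f), 1.0f);
    int i = std::min(static_cast<int>(u * tex.width), tex.width - 1);
    int j = std::min(static_cast<int>(v * tex.height), tex.height - 1);
    return tex.texels[static_cast<std::size_t>(j) * tex.width + i];
}

// Textured color scaled by the diffuse term max(dot(N, L), 0).
// Assumes `normal` and `toLight` are already normalized.
Vec3 shadeFragment(const Texture& tex, float u, float v,
                   const Vec3& normal, const Vec3& toLight) {
    Vec3 albedo = sampleNearest(tex, u, v);
    float diffuse = std::max(dot(normal, toLight), 0.0f);
    return {albedo.x * diffuse, albedo.y * diffuse, albedo.z * diffuse};
}
```

Clamping the diffuse term at zero keeps surfaces facing away from the light black instead of producing negative colors.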

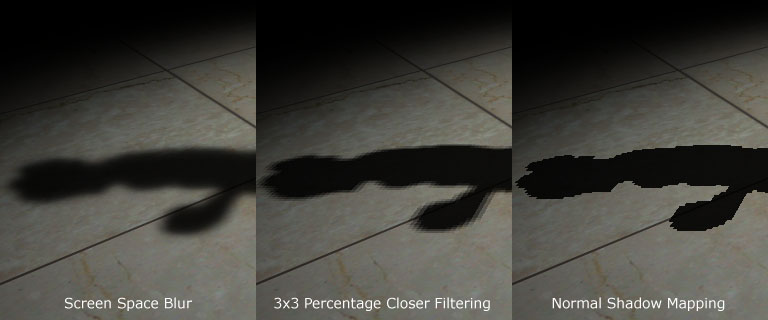

Shadow Mapping

Although I mentioned how shadow mapping works in previous posts, I never went through it in enough detail or explained the math behind it. After the shadow map is created in a first pass rendered from the light's perspective, the second pass renders the scene from the camera's perspective. From here, we need to map pixels from camera space into the space of the shadow map to test whether a given pixel is occluded. What we have is the world-to-light matrix ${}^{L}T_{W}$ and the world-to-camera (modelview) matrix ${}^{C}T_{W}$; what we need is to transform a point $v_C$ from camera space to light space. A first attempt would be ${}^{L}T_{W}\,{}^{C}T_{W}\,v_C$, but that doesn't work because the frames do not match: ${}^{C}T_{W}$ expects a point in world space, while $v_C$ is already in camera space. What we need instead is

$$v_L = {}^{L}T_{W}\,{}^{W}T_{C}\,v_C,$$

where ${}^{W}T_{C}$ takes camera space back to world space. To get ${}^{W}T_{C}$, we take the inverse of the modelview matrix: ${}^{W}T_{C} = \left({}^{C}T_{W}\right)^{-1}$.
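A minimal C++ sketch of this transform and the occlusion test, under several assumptions: matrices are row-major 4x4 arrays (OpenGL and GLM actually store them column-major), and the world-to-camera matrix is rigid (rotation and translation only), so its inverse can be computed as $[R^{T} \mid -R^{T}t]$ instead of a general 4x4 inverse. The names `lightFromWorld`, `camFromWorld`, `cameraToLight` and `inShadow` are hypothetical. A complete implementation would also apply the light's projection and a bias that maps coordinates into the [0, 1] range before looking up the shadow map; that step is left out, and `inShadow` simply assumes both depths are already in the same range, with larger values farther from the light.

```cpp
#include <array>

// Row-major 4x4 matrix and homogeneous point (assumption of this sketch).
using Mat4 = std::array<std::array<float, 4>, 4>;
using Vec4 = std::array<float, 4>;

Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

// Inverse of a rigid transform [R | t]: [R^T | -R^T t].
// Only valid when the upper-left 3x3 block is a pure rotation.
Mat4 rigidInverse(const Mat4& m) {
    Mat4 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            r[i][j] = m[j][i];  // transpose of the rotation
    for (int i = 0; i < 3; ++i)
        r[i][3] = -(r[i][0] * m[0][3] + r[i][1] * m[1][3] + r[i][2] * m[2][3]);
    r[3][3] = 1.0f;
    return r;
}

// v_L = (world -> light) * (camera -> world) * v_C,
// where camera -> world is the inverse of the world -> camera matrix.
Vec4 cameraToLight(const Mat4& lightFromWorld, const Mat4& camFromWorld,
                   const Vec4& vC) {
    Mat4 worldFromCam = rigidInverse(camFromWorld);
    return mul(mul(lightFromWorld, worldFromCam), vC);
}

// Occlusion test: the point is in shadow if it lies farther from the light
// than the depth stored in the shadow map. `bias` is a small offset that
// helps avoid self-shadowing ("shadow acne").
bool inShadow(float depthFromLight, float shadowMapDepth, float bias) {
    return depthFromLight - bias > shadowMapDepth;
}
```

Multiplying the two matrices once and reusing the product for every pixel is the usual choice, since ${}^{L}T_{W}\,{}^{W}T_{C}$ does not change within a frame.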